Meta’s latest AI model collection brings game-changing innovations to multimodal intelligence

Introduction: A New Era of AI Models Has Arrived

On April 5th, 2025, Meta unveiled what might be its most significant AI breakthrough yet—the Llama 4 collection. This isn’t just another incremental update; it represents a fundamental architectural shift that could reshape how we think about and use AI systems. By combining a revolutionary Mixture of Experts (MoE) approach with native multimodality, Meta has created models that are not only more powerful but dramatically more efficient than previous generations.

As AI enthusiasts and industry watchers digest this major announcement, one thing becomes clear: Llama 4 marks the beginning of what Meta calls “a new era of natively multimodal intelligence.” But what exactly makes these models special, and how might they impact the AI landscape moving forward?

In this comprehensive guide, I’ll break down everything you need to know about Meta’s Llama 4 collection—from its innovative architecture to its impressive capabilities and what they mean for developers, businesses, and everyday users.

The Llama 4 Collection: Meet the Herd

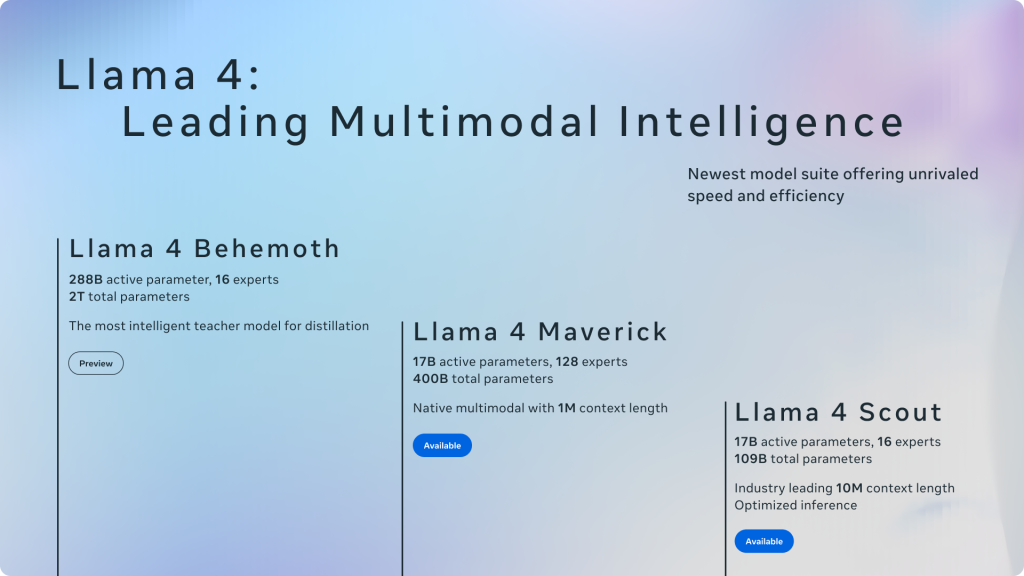

Meta has released two models from the Llama 4 family, with a third still in training, each offering different capabilities to serve various needs:

Llama 4 Scout: The Accessible Pioneer

Scout serves as the entry point to the Llama 4 collection and brings impressive multimodal capabilities in a relatively smaller package:

- 17 billion active parameters distributed across 16 experts

- Total parameter count: 109 billion

- State-of-the-art performance for its class

- More powerful than previous Llama 3 models

- Groundbreaking 10 million token context window

This enormous context window is particularly noteworthy—it’s one of the first open models offering this capability, enabling entirely new categories of applications.

Llama 4 Maverick: The Current Flagship

Maverick represents Meta’s current flagship offering in the collection:

- 17 billion active parameters spread across 128 experts

- Total parameter count: 400 billion

- “Unparalleled, industry-leading performance” in image and text understanding

- Comparable to models like GPT-4o and Gemini 1.0 Flash

- Support for 12 languages, enabling sophisticated multilingual applications

Maverick is positioned as “the workhorse model for general assistant and chat use cases,” with excellent capabilities for precise image understanding and creative writing.

Llama 4 Behemoth: The Coming Giant

The third model, Llama 4 Behemoth, remains in development but is already generating significant buzz:

- According to Meta CEO Mark Zuckerberg, it’s positioned to be “the most efficient base model globally” once completed

- Suggests Meta is preparing to challenge the largest and most powerful models from competitors like OpenAI and Anthropic

- Being pre-trained using FP8 precision on 32K GPUs with an impressive 390 TFLOPs per GPU

While details remain limited, the name alone suggests Meta has ambitious plans for this model’s capabilities.

Revolutionary Architecture: Why Llama 4 Changes the Game

The most significant leap forward in Llama 4 lies in its architectural innovations, which represent a fundamental departure from previous approaches.

Mixture of Experts: The Efficiency Breakthrough

Llama 4 marks Meta’s first implementation of the Mixture of Experts (MoE) architecture, and this design choice fundamentally transforms how the model operates:

How Traditional Dense Models Work:

- All parameters are activated for every token processed

- Requires substantial compute resources for both training and inference

- Limited scaling potential due to computational constraints

How MoE Models Work:

- Consists of specialized neural networks (the “experts”)

- Includes a “router” component that directs incoming tokens to appropriate experts

- Only a fraction of total parameters are activated for each token

- Substantially more compute-efficient for both training and inference

The practical benefits of this approach are substantial. For example, when Llama 4 is asked to write code, it activates only the coding experts while leaving other experts dormant. This specialized approach delivers faster responses while maintaining high intelligence and performance.

Given a fixed training computational budget, MoE architectures can deliver higher quality models compared to traditional dense architectures. This means Meta can create more powerful models without proportional increases in computational requirements—a crucial factor for sustainable AI development.

Native Multimodality with Early Fusion

Unlike Llama 3.2, which used separate parameters for text and vision, Llama 4 incorporates early fusion to seamlessly integrate text and vision tokens into a unified model backbone. This architectural decision has significant implications:

- Enables joint pre-training with large amounts of unlabeled text, image, and video data

- Creates more coherent reasoning across text and images

- Allows the model to “think” in a truly multimodal way rather than translating between separate text and image understanding systems

Meta has also improved the vision encoder in Llama 4, building upon MetaCLIP but training it separately in conjunction with a frozen Llama model to better adapt the encoder to the large language model. This enhancement contributes to the model’s improved performance in visual understanding tasks.

[Insert Image: Visual diagram showing the early fusion approach in Llama 4 vs. the separate parameters in Llama 3.2 – Alt text: “Diagram comparing Llama 4’s early fusion approach to Llama 3.2’s separate parameters for text and vision processing”]

Technical Specifications: Inside Llama 4’s Capabilities

Unprecedented Context Window

One of the most remarkable features is Scout’s massive context window of up to 10 million tokens—making it one of the first open models with this capability. This extraordinary context length enables:

- Processing entire codebases at once

- Summarizing multiple documents simultaneously

- Maintaining longer, more coherent conversations with comprehensive context

- Building sophisticated RAG (Retrieval Augmented Generation) systems that can incorporate vast amounts of contextual information

This represents a quantum leap from previous models’ context windows, which typically ranged from a few thousand to a few hundred thousand tokens at most.

Advanced Training Methodology

The training methodology for Llama 4 includes several innovations:

- MetaP Technique: A novel approach that allows Meta to reliably set critical model hyper-parameters such as per-layer learning rates and initialization scales. These chosen hyper-parameters transfer well across different values of batch size, model width, depth, and training tokens, enabling more consistent and reliable model performance.

- Efficient Training: Meta focused on efficient model training by using FP8 precision without sacrificing quality, ensuring high model FLOPs utilization.

- Massive Data Scale: The overall data mixture for training consisted of more than 30 trillion tokens—more than double the Llama 3 pre-training mixture—and included diverse text, image, and video datasets.

Enhanced Multilingual Capabilities

Llama 4 significantly expands multilingual capabilities:

- Pre-training on 200 languages

- Over 100 languages with more than 1 billion tokens each

- Represents a tenfold increase in multilingual tokens compared to Llama 3

- Llama 4 Maverick specifically supports 12 languages

This multilingual focus enables the creation of sophisticated AI applications that bridge language barriers, making advanced AI more accessible globally.

Real-World Applications: What Can You Build with Llama 4?

The architectural innovations and enhanced capabilities of Llama 4 open up new possibilities for AI applications across domains:

1. Advanced Document Processing and Analysis

With its 10-million token context window, Llama 4 can process and analyze entire document collections at once:

- Legal contract analysis across hundreds of documents

- Academic research synthesis spanning thousands of papers

- Comprehensive business intelligence from extensive reports and databases

2. Sophisticated Visual Understanding

Thanks to its native multimodality with early fusion:

- More accurate image captioning and visual question answering

- Enhanced visual reasoning for robotics and autonomous systems

- Better medical image analysis and diagnostic assistance

- More natural visual conversations in assistant applications

3. Enterprise Knowledge Systems

The combination of massive context windows and improved reasoning capabilities enables:

- Creation of enterprise-wide knowledge graphs with deep contextual understanding

- Development of more effective internal search and recommendation systems

- Building corporate memory systems that maintain coherence across vast knowledge bases

4. Enhanced Creative Tools

Llama 4’s improvements particularly benefit creative applications:

- More coherent long-form content generation

- Better visual creative assistance for designers and artists

- Enhanced multimodal storytelling applications

- More sophisticated AI-assisted design tools

5. Personalized Education Systems

The improved reasoning and multimodal capabilities support:

- More personalized education systems that adapt to individual learning styles

- Better visual explanations of complex concepts

- More coherent long-form educational content

- Enhanced tutoring experiences across diverse subjects

Deployment and Availability: How to Access Llama 4

Meta has made Llama 4 Scout and Llama 4 Maverick available through several channels:

- Direct download via llama.com

- Through Hugging Face

- Via cloud platforms like Groq, which promises high performance at low cost

- Already powering Meta AI across various platforms, including WhatsApp, Messenger, Instagram Direct, and the Meta.AI website

However, there are notable restrictions in the availability of Llama 4 models:

- Individuals and businesses based in the European Union are barred from utilizing or distributing these models “due to the unpredictable nature of the European regulatory environment”

- This decision appears related to concerns about complying with the EU’s GDPR data protection law rather than the forthcoming AI Act

- Enterprises with over 700 million monthly active users must obtain a special license from Meta

These availability constraints reflect the complex regulatory landscape surrounding AI development and deployment globally.

Performance and Capabilities: How Good Is It Really?

Llama 4 models demonstrate significant improvements over previous generations in both capabilities and efficiency:

- Efficiency Gains: Compared to Llama 3.1/3.2, the shift to MoE architecture provides better performance with more efficient resource utilization

- Multimodal Coherence: The early-fusion approach to multimodality delivers more coherent reasoning across text and images than the separate parameters used in Llama 3.2

- Safety Improvements: Maverick is optimized for response quality on tone and appropriate refusals, suggesting improvements in safety and alignment

Meta has stated that they aim to develop the most helpful, useful models for developers while protecting against and mitigating severe risks. This includes integrating mitigations at each layer of model development from pre-training to post-training, as well as tunable system-level mitigations that protect developers from adversarial users.

The massive context window of 10 million tokens in Scout enables entirely new application categories that were previously impossible. This includes sophisticated RAG systems that can incorporate vast amounts of contextual information and maintain coherence across extensive interactions.

The Road Ahead: Future Developments for Llama 4

Meta has indicated that the current release is “just the beginning for the Llama 4 collection.” They believe that the most intelligent systems need to be capable of:

- Taking generalized actions

- Conversing naturally with humans

- Working through challenging problems they haven’t encountered before

The company plans to share more about their vision at the upcoming LlamaCon event on April 29, 2025. This suggests that Meta has a broader roadmap for Llama 4 that extends beyond the current model releases.

The continued development of Llama 4 Behemoth will likely result in an even more powerful model that pushes the boundaries of what’s possible with current AI technology. Meta’s emphasis on efficiency suggests they’re focused not just on raw capability but on making these advanced models accessible and practical for widespread deployment.

Industry Impact: How Llama 4 Changes the AI Landscape

The release of Llama 4 has significant implications for the broader AI industry:

Democratizing Advanced AI Capabilities

By making these powerful models available through multiple channels, Meta continues to democratize access to cutting-edge AI capabilities. This stands in contrast to some competitors who keep their most advanced models behind closed APIs.

Pushing Architectural Innovation

The adoption of MoE architecture represents a meaningful shift in how large language models are built. If Llama 4 proves successful, we may see other major AI labs follow suit with similar approaches.

Setting New Efficiency Standards

Meta’s focus on efficiency rather than just raw scale challenges the industry narrative that bigger is always better. This could drive a more sustainable approach to AI development industry-wide.

Accelerating Multimodal Development

The native multimodality with early fusion puts pressure on other AI labs to improve their multimodal offerings, potentially accelerating development in this area.

Conclusion: A Genuine Leap Forward in AI Development

Llama 4 represents a genuine advancement in open-source AI model architecture. The introduction of MoE architecture combined with early-fusion multimodality creates models that are not just more efficient but qualitatively better for many applications. The significant increases in context window size, multilingual capabilities, and multimodal understanding suggest that Meta is positioning Llama 4 as a comprehensive solution for a wide range of AI applications.

While regulatory challenges in regions like the EU present obstacles to global adoption, Meta’s commitment to making these models widely available through multiple channels demonstrates their continued belief in the value of open innovation in AI development. As companies and developers begin to build with these new models, we can expect to see novel applications that leverage their unique capabilities across domains from creative content generation to sophisticated business applications.

The AI landscape continues to evolve at a breakneck pace, but Llama 4 stands out as more than just an incremental improvement—it represents a fundamental rethinking of how large AI models are designed and deployed. As we wait for more details about the upcoming Behemoth model and Meta’s broader vision for AI, one thing is clear: Llama 4 has raised the bar for what we should expect from the next generation of AI systems.

Want to learn more about developments in AI? Follow our AI Category for the latest news!

For developers interested in working with Llama models, visit Meta’s official Llama documentation for detailed implementation guides and resources.